sequenceDiagram

participant User

participant AI

participant VectorRepo

participant Vectorize Service

User->>AI: Ask question

AI->>VectorRepo: Search for content

VectorRepo-->>AI: Error: Vectorized repo not found

AI->>Vectorize Service: Vectorize repository

Vectorize Service-->>AI: Repo vectorized, basic info returned

AI->>VectorRepo: Search for content again

VectorRepo-->>AI: Search results

AI->>User: Return curated results

Using Athena to look up obscure implementation details

I’ve recently been creating a plugin for Reflex that integrates Clerk user authentication. Part of this involved setting up some fiddly details that, understandably, aren’t well documented in the Reflex docs. So I turned to Athena to help me find the relevant code within the Reflex repository, so that I didn’t end up duplicating their work unnecessarily. It took all of 10 seconds to find what I was looking for, and another few seconds to get all the context I needed to finish my task.

The task

I want to allow users (other Reflex developers) to pass in props via type-annotated snake_case arguments (as is the norm in Python), but the React component I am wrapping needs the arguments translated into camelCase.
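To make the task concrete, here is a minimal sketch of the conversion itself. This is a standalone illustration, not Reflex's implementation, and the prop name used in the example is just a plausible-looking placeholder.

```python
def to_camel_case(name: str) -> str:
    """Convert a snake_case identifier to camelCase.

    Illustrative helper only; it does not try to handle leading
    underscores or acronyms in any special way.
    """
    first, *rest = name.split("_")
    # Keep the first word as-is and capitalize every following word.
    return first + "".join(word.capitalize() for word in rest)


# Hypothetical prop name, as a Python developer would write it.
print(to_camel_case("sign_in_url"))  # -> signInUrl
```

Easy enough, which is exactly why it felt wrong to write it again if the framework already had it.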

The problem

This is an easy enough problem to solve, but it has surely been solved long ago somewhere in the Reflex repository too. A quick search of the Reflex docs doesn’t bring up anything obvious, though. So what now?

The old way

Start grepping my way through the Reflex repository, looking for places where snake and camel are mentioned. It’s not terrible, but it’s a bit of a PITA… particularly if your search terms bring up hundreds of unrelated results, or none at all.

The Athena way

Instead, I decided to ask my personal LLM assistant “Athena”.

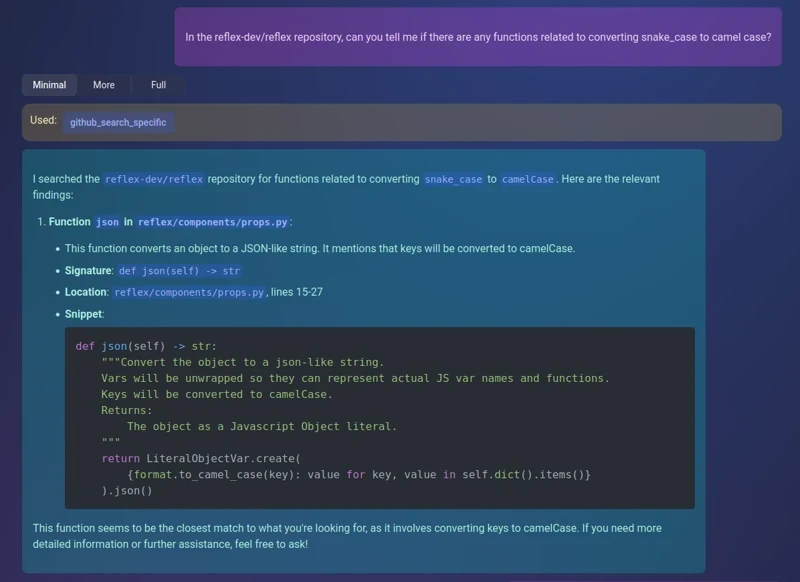

In the reflex-dev/reflex repository, can you tell me if there are any functions related to converting snake_case to camel case?

In response, Athena decides to carry out this search via the github_search_specific tool (one of many custom tools I have built), passing these arguments:

    {
        "repo_owner": "reflex-dev",
        "repo_name": "reflex",
        "query_string": "function to convert snake_case to camelCase",
        "query_embedding_type": "text"
    }

There are a bunch of additional parameters that could have been specified for a more refined search, but for an initial search, it makes sense to use their sensible defaults (saving output tokens).

This tool is specifically good at finding chunks of code based on similarity to a description when using the "text" embedding type, so that was a good choice.

Part of the magic here is that when vectorizing the repository, I make sure that each chunk of code has several embeddings optimized for different types of searching (code snippets, function names, description, etc.)
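As a rough sketch of the "several embeddings per chunk" idea: each chunk stores one vector per search style, keyed by embedding type. Only the "text" type name comes from the tool call above; the "code" and "name" keys, the `CodeChunk` class, and the `toy_embed` function are hypothetical stand-ins (a real setup would call an embedding model instead of hashing).

```python
import hashlib
from dataclasses import dataclass, field


def toy_embed(text: str, dims: int = 8) -> list[float]:
    """Stand-in for a real embedding model: a deterministic pseudo-vector."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [byte / 255.0 for byte in digest[:dims]]


@dataclass
class CodeChunk:
    file_path: str
    name: str
    code: str
    description: str
    # One vector per embedding type, e.g. {"text": [...], "code": [...]}.
    embeddings: dict[str, list[float]] = field(default_factory=dict)


def embed_chunk(chunk: CodeChunk) -> CodeChunk:
    """Attach one embedding per search style to the chunk."""
    chunk.embeddings = {
        "code": toy_embed(chunk.code),         # search by code snippet
        "name": toy_embed(chunk.name),         # search by function name
        "text": toy_embed(chunk.description),  # search by description
    }
    return chunk


chunk = embed_chunk(
    CodeChunk(
        file_path="reflex/components/props.py",
        name="json",
        code="def json(self) -> str: ...",
        description="Convert the object to a json-like string.",
    )
)
print(sorted(chunk.embeddings))  # -> ['code', 'name', 'text']
```

At query time, the embedding type in the tool call picks which of these vectors the query is compared against.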

This search takes a fraction of a second and returns only the few most similar chunks of code from the entire repository (out of a total of 11,000 possible chunks). No need to waste expensive LLM computation time feeding in all that context every time!
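The retrieval step can be pictured as a plain top-k similarity search. The sketch below uses brute-force cosine similarity over made-up two-dimensional vectors and hypothetical chunk ids; it is an assumption about the general technique, and a real setup would more likely lean on a vector database or an approximate nearest-neighbour index.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query_vector: list[float],
    chunk_vectors: dict[str, list[float]],
    k: int = 3,
) -> list[tuple[str, float]]:
    """Return the k chunk ids most similar to the query, best first."""
    scored = [
        (chunk_id, cosine_similarity(query_vector, vector))
        for chunk_id, vector in chunk_vectors.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy index with hypothetical chunk ids and made-up vectors.
index = {
    "chunk_a": [1.0, 0.0],
    "chunk_b": [0.6, 0.8],
    "chunk_c": [0.0, 1.0],
}
best = top_k([1.0, 0.1], index, k=2)
print([chunk_id for chunk_id, _ in best])  # -> ['chunk_a', 'chunk_b']
```

Only those few winners are passed to the LLM, which is what keeps the context small.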

I’ve previously asked questions about the Reflex repository, so Athena already has it vectorized… Otherwise, the tool call would have returned an error indicating that it hadn’t been vectorized yet, and Athena could handle that and call another tool that carries out that initial vectorization first.

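The general workflow here (the sequence diagram at the top of this post) looks something like this: I ask a question, Athena searches the vectorized repository and, if the repository hasn’t been vectorized yet, gets an error back, calls the vectorization tool, repeats the search, and then returns curated results to me.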
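In code, that search-then-vectorize-then-retry flow could be sketched roughly as follows. Everything here is hypothetical (`FakeVectorStore`, `RepoNotVectorizedError`, `search_with_fallback`), a keyword match stands in for the similarity search, and the fallback is hard-coded, whereas in practice Athena decides for itself to call the vectorization tool after seeing the error.

```python
from typing import Callable


class RepoNotVectorizedError(Exception):
    """Raised when a search targets a repository with no stored vectors."""


class FakeVectorStore:
    """In-memory stand-in for the vectorized-repository service."""

    def __init__(self) -> None:
        self._repos: dict[str, list[str]] = {}

    def vectorize(self, repo: str, chunks: list[str]) -> None:
        self._repos[repo] = chunks

    def search(self, repo: str, query: str) -> list[str]:
        if repo not in self._repos:
            raise RepoNotVectorizedError(repo)
        words = query.lower().split()
        # Keyword match as a placeholder for a real similarity search.
        return [c for c in self._repos[repo] if any(w in c.lower() for w in words)]


def search_with_fallback(
    store: FakeVectorStore,
    repo: str,
    query: str,
    fetch_chunks: Callable[[str], list[str]],
) -> list[str]:
    """Search the repo, vectorizing it first if that has not happened yet."""
    try:
        return store.search(repo, query)
    except RepoNotVectorizedError:
        store.vectorize(repo, fetch_chunks(repo))
        return store.search(repo, query)


store = FakeVectorStore()
results = search_with_fallback(
    store,
    "reflex-dev/reflex",
    "camel",
    fetch_chunks=lambda repo: ["def to_camel_case(text): ...", "def render(self): ..."],
)
print(results)  # -> ['def to_camel_case(text): ...']
```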

Even though this very efficiently narrows down the search, the results aren’t necessarily going to be great, so I don’t want to have to sift through them myself. Instead, Athena picks up the results and correctly identifies that the first few aren’t actually relevant, and singles out the one that is. It then returns that to me with some additional information about where the code snippet came from:

I searched the reflex-dev/reflex repository for functions related to converting snake_case to camelCase. Here are the relevant findings:

Function json in reflex/components/props.py: This function converts an object to a JSON-like string. It mentions that keys will be converted to camelCase.

Signature:

    def json(self) -> str

Location:

    reflex/components/props.py, lines 15-27

Snippet:

    def json(self) -> str:
        """Convert the object to a json-like string.

        Vars will be unwrapped so they can represent actual JS var names and functions.

        Keys will be converted to camelCase.

        Returns:
            The object as a Javascript Object literal.
        """
        return LiteralObjectVar.create(
            {format.to_camel_case(key): value for key, value in self.dict().items()}
        ).json()

This function seems to be the closest match to what you’re looking for, as it involves converting keys to camelCase. If you need more detailed information or further assistance, feel free to ask!

This is exactly the sort of thing I was looking for!

Even though I can now see exactly where this chunk is located, I don’t want to have to go there and look. So I ask:

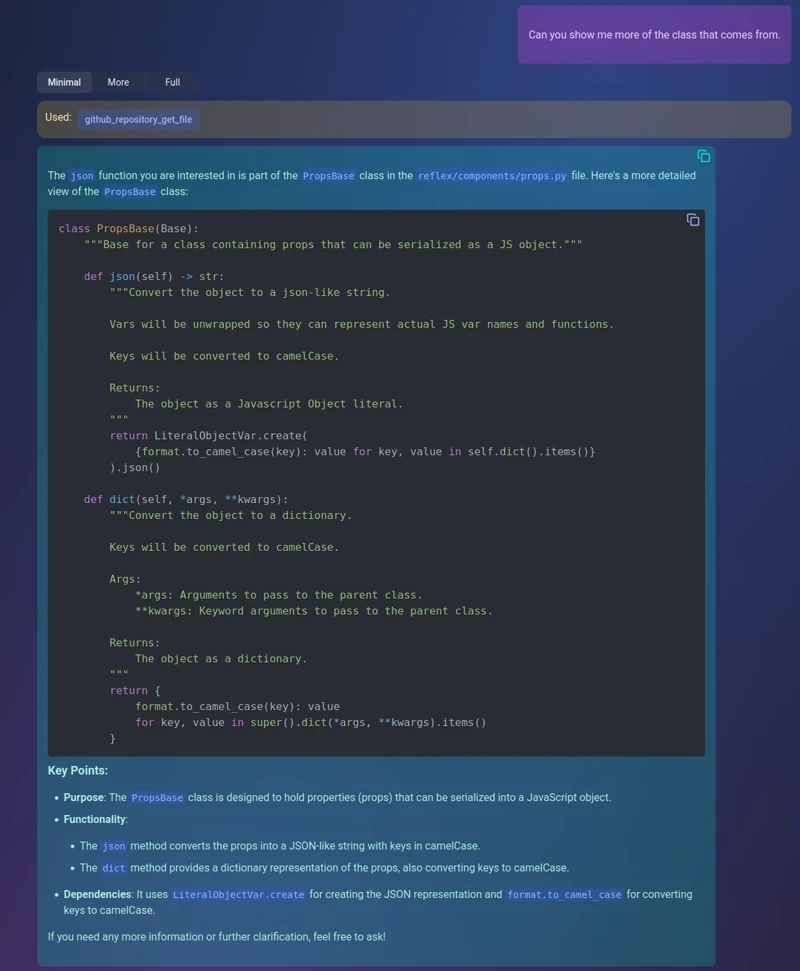

Can you show me more of the class that comes from.

This time, Athena chooses to use a different tool, github_repository_get_file, since it can also see from the previous message where this snippet came from.

    {"repo_owner":"reflex-dev","repo_name":"reflex","file_path":"reflex/components/props.py"}

With the extra info returned, Athena gives me just the parts I need.

The json function you are interested in is part of the PropsBase class in the reflex/components/props.py file. Here’s a more detailed view of the PropsBase class:

    class PropsBase(Base):
        """Base for a class containing props that can be serialized as a JS object."""

        def json(self) -> str:
            """Convert the object to a json-like string.

            Vars will be unwrapped so they can represent actual JS var names and functions.

            Keys will be converted to camelCase.

            Returns:
                The object as a Javascript Object literal.
            """
            return LiteralObjectVar.create(
                {format.to_camel_case(key): value for key, value in self.dict().items()}
            ).json()

        def dict(self, *args, **kwargs):
            """Convert the object to a dictionary.

            Keys will be converted to camelCase.

            Args:
                *args: Arguments to pass to the parent class.
                **kwargs: Keyword arguments to pass to the parent class.

            Returns:
                The object as a dictionary.
            """
            return {
                format.to_camel_case(key): value
                for key, value in super().dict(*args, **kwargs).items()
            }

Key Points:

- Purpose: The PropsBase class is designed to hold properties (props) that can be serialized into a JavaScript object.
- Functionality:
  - The json method converts the props into a JSON-like string with keys in camelCase.
  - The dict method provides a dictionary representation of the props, also converting keys to camelCase.
- Dependencies: It uses LiteralObjectVar.create for creating the JSON representation and format.to_camel_case for converting keys to camelCase.

If you need any more information or further clarification, feel free to ask!

So this PropsBase class is actually what I should have been looking for in the first place, and now I can just use it directly instead of the Pydantic BaseModel I had first used.
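To show the shape of that pattern without depending on Reflex, here is a standalone sketch: a base class whose serialization step renames snake_case fields to camelCase, and a subclass that only declares typed fields. `CamelCaseProps`, `SignInProps`, `to_js_props`, and the field names are all hypothetical; in the real plugin the base class would simply be Reflex's PropsBase.

```python
from dataclasses import asdict, dataclass


def _to_camel_case(name: str) -> str:
    """Illustrative snake_case to camelCase helper."""
    first, *rest = name.split("_")
    return first + "".join(word.capitalize() for word in rest)


@dataclass
class CamelCaseProps:
    """Hypothetical stand-in for a props base class like PropsBase."""

    def to_js_props(self) -> dict[str, object]:
        # Rename every field to camelCase at serialization time.
        return {_to_camel_case(key): value for key, value in asdict(self).items()}


@dataclass
class SignInProps(CamelCaseProps):
    # Fields are declared the Pythonic way: typed and snake_case.
    sign_up_url: str = "/sign-up"
    fallback_redirect_url: str = "/"


print(SignInProps(sign_up_url="/join").to_js_props())
# -> {'signUpUrl': '/join', 'fallbackRedirectUrl': '/'}
```

The subclass stays free of any naming logic, which is the appeal of inheriting the behaviour rather than reimplementing it.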

Conclusion

Obviously, this task was a pretty simple one, but also the kind of thing that can easily soak up minutes when those initial grep searches don’t find what you want (and this is after you’ve got the repository cloned and a new terminal window in the right place etc.).

Working with Athena on this instead, I wrote two short sentences, waited less than 15 seconds in total for responses, and had exactly what I needed displayed right in front of me. This for me is where AI tools shine…

Next steps

You could also imagine this sort of task being useful within a larger AI-driven workflow… Had I given Athena a much larger development task to implement for me, a logical first step might involve gathering the most relevant context related to several different ideas.

Well, Athena is ready for that: rather than doing the GitHub searches directly, it could instead decide to call several sub-assistants simultaneously, each given a smaller part of the task. Those sub-assistants can additionally be tuned more finely toward the specific tasks they are given (like GitHub searching or code generation) and use different LLMs or settings to further increase quality and efficiency. They then return only the most useful, curated results from their workflows, so that the main assistant can use that information to proceed with the larger task.
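The fan-out part of that idea maps naturally onto concurrent calls. Below is a minimal sketch using asyncio, where `run_sub_assistant` and `gather_context` are hypothetical names and a short sleep stands in for the LLM and tool calls a real sub-assistant would make.

```python
import asyncio


async def run_sub_assistant(specialty: str, subtask: str) -> str:
    """Hypothetical sub-assistant: does its own work, returns a curated summary."""
    await asyncio.sleep(0.01)  # placeholder for LLM and tool calls
    return f"[{specialty}] curated result for: {subtask}"


async def gather_context(subtasks: dict[str, str]) -> list[str]:
    """Run one sub-assistant per subtask concurrently and collect the results."""
    jobs = [run_sub_assistant(specialty, task) for specialty, task in subtasks.items()]
    # gather() preserves the order of the jobs in its result list.
    return await asyncio.gather(*jobs)


results = asyncio.run(
    gather_context(
        {
            "github_search": "find prop serialization helpers",
            "code_generation": "draft the component wrapper",
        }
    )
)
for line in results:
    print(line)
```

The main assistant only ever sees the returned summaries, not the intermediate searches each sub-assistant ran.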